Summarization of textual content in Alfresco repository with Amazon Bedrock


Summarizing documents with Amazon Bedrock

Introduction

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) along with a broad set of capabilities that you need to build generative AI applications, simplifying development with security, privacy and responsible AI. Amazon Bedrock leverages AWS Lambda for invoking actions, Amazon S3 for training and validation data, and Amazon CloudWatch for tracking metrics.

With Amazon Bedrock, you can summarize textual content such as articles, blog posts, books, and documents to get the gist without reading the full text. The Amazon Bedrock FAQ lists other use cases that Amazon Bedrock can help you get started on quickly.

Overview

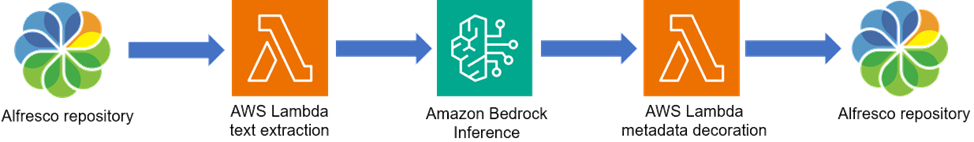

In this post, we describe how to use the Alfresco Content Services REST API and the Amazon Bedrock SDK APIs to summarize the textual content of documents in an Alfresco repository. We assume you are familiar with developing Java software against these APIs.

We first add an aspect to the repository and apply it to the documents we want summarized. Next, the AmazonBedrockSummarizationAlfresco Lambda function is triggered; it queries the repository for documents marked for summarization and extracts their textual content. The prompt to Amazon Bedrock is read from a property of the crestBedrock:GenAI aspect on the document, concatenated with the textual content, and sent to Amazon Bedrock. If the textual content is shorter than ArbitrarySynchronousBedrockInvocationLength, Amazon Bedrock's synchronous API is used; otherwise, Amazon Bedrock's batch API is used. Additionally, if the textual content is longer than BedrockBatchClaudePromptMaxLength, it is truncated to fit Amazon Bedrock's allowable prompt length.

For batched Amazon Bedrock requests, a JSONL file containing the textual content is staged on S3.
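The staging step can be sketched as follows. This is a minimal sketch, not the author's code: it assumes each JSONL line pairs a `recordId` (the random string whose length is set by OutputRandomizerPrefixLength) with a Claude text-completion request body (`prompt`, `max_tokens_to_sample`, `stop_sequences`) as `modelInput`, which is what the sample batch output later in this post suggests. The class name, the token limit, and the object key layout (staging prefix, random ID, node ID, `.jsonl`) are hypothetical; the key layout is inferred from the `*.jsonl.out` naming the post describes.

```java
import java.security.SecureRandom;

// Hypothetical helper for staging one batched request as a JSONL object.
final class BatchRecordSketch {
    private static final String ALPHANUMERIC =
            "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789";
    private static final SecureRandom RANDOM = new SecureRandom();

    private BatchRecordSketch() {}

    // Random alphanumeric string; its length would come from OutputRandomizerPrefixLength.
    static String randomId(int length) {
        StringBuilder sb = new StringBuilder(length);
        for (int i = 0; i < length; i++) {
            sb.append(ALPHANUMERIC.charAt(RANDOM.nextInt(ALPHANUMERIC.length())));
        }
        return sb.toString();
    }

    // Minimal JSON string escaping: quotes, backslashes, and control characters.
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder(s.length());
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    // One JSONL line: recordId plus an assumed Claude text-completion body as modelInput.
    static String jsonlLine(String recordId, String prompt, int maxTokensToSample) {
        return "{\"recordId\":\"" + jsonEscape(recordId) + "\","
                + "\"modelInput\":{\"prompt\":\"" + jsonEscape(prompt) + "\","
                + "\"max_tokens_to_sample\":" + maxTokensToSample + ","
                + "\"stop_sequences\":[\"\\n\\nHuman:\"]}}";
    }

    // Assumed key layout: staging prefix + random ID + Alfresco node ID + ".jsonl".
    static String objectKey(String keyPrefix, String recordId, String nodeId) {
        return keyPrefix + recordId + nodeId + ".jsonl";
    }
}
```

Uploading the line (for example with the AWS SDK for Java's `S3Client.putObject`) is left out so the sketch runs without dependencies.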

A separate Lambda function, AmazonBatchedBedrockSummarizationAlfresco, retrieves the JSONL files from S3 and processes them with Amazon Bedrock's batch API. Amazon Bedrock writes completed batches back to S3, and the same Lambda function retrieves them and writes the generated summaries into the crestBedrock:summary property of the respective document nodes in the Alfresco repository.

Detailed Design

Aspect

crestBedrock:GenAI

This aspect is applied to the document nodes that are to be summarized. Each property in the aspect carries its own description in the aspect definition.

AWS Lambda functions (Java 17)

AmazonBedrockSummarizationAlfresco

Amazon Bedrock SDK v2.22.x is used for this function.

| Environment variable name | Purpose | Default value (Example) |
|---|---|---|
| PARAMETERS_SECRETS_EXTENSION_HTTP_PORT | HTTP port of the AWS Parameters and Secrets Lambda Extension; see using AWS Secrets Manager secrets in AWS Lambda | 2773 |
| alfrescoHost | Host name of the Alfresco repository | None (acme.alfrescocloud.com) |
| alfrescoHostProtocol | Connection protocol for the Alfresco repository | https (https or http) |
| awsSecretsManagerSecretArn | ARN of the secret that contains the Alfresco repository credentials used to query, retrieve, and update document nodes | None (arn:aws:secretsmanager:us-east-1:XXXXXXXXXX:secret:YYYY) |
| s3Uri | Location to stage textual content for asynchronous (batch) inference | None (s3://bbbbbbbb/fffff/) |
| queryJson | AFTS query used to locate documents in the Alfresco repository marked for summarization | None (`{"query":{"language":"afts","query":"TYPE:'cm:content' AND ASPECT:'crestBedrock:GenAI' AND crestBedrock:generateSummary:'true' AND name:*"},"include":["properties"]}`) |
| BedrockRegion | AWS Region in which to run Bedrock inference; see the Amazon Bedrock runtime service endpoints page for availability | us-east-1 (us-west-2) |
| ArbitarySynchronousBedrockInvocationLength | To mitigate an HTTP client timeout issue, textual content of this length or longer is inferred asynchronously (batch) | None (250000) |
| BedrockBatchClaudePromptMaxLength | Maximum length of the textual content, excluding the prompt; characters beyond this length are truncated | None (599700) |
| OutputRandomizerPrefixLength | Length of the random string generated as the Amazon Bedrock batch job ID; also used as the recordId described in the Amazon Bedrock batch inference guide | None (12) |

Note: Bolded variables are mandatory.
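Reading this configuration could look like the hypothetical sketch below. The record and method names are illustrative, and because the bold markers did not survive, it assumes that every variable without a default value is mandatory. PARAMETERS_SECRETS_EXTENSION_HTTP_PORT is left out here; it would be read separately when calling the secrets extension.

```java
import java.util.Map;

// Hypothetical configuration holder for AmazonBedrockSummarizationAlfresco.
// Assumption: variables without a default in the table are treated as required.
record SummarizationConfig(
        String alfrescoHost,
        String alfrescoHostProtocol,
        String secretArn,
        String s3Uri,
        String queryJson,
        String bedrockRegion,
        int syncInvocationLength,
        int maxPromptLength,
        int randomizerPrefixLength) {

    // Returns the named variable, failing fast if it is missing or blank.
    private static String required(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("Missing environment variable: " + name);
        }
        return value;
    }

    // Pass System.getenv() inside the Lambda; a plain map keeps this easy to test.
    static SummarizationConfig from(Map<String, String> env) {
        return new SummarizationConfig(
                required(env, "alfrescoHost"),
                env.getOrDefault("alfrescoHostProtocol", "https"),
                required(env, "awsSecretsManagerSecretArn"),
                required(env, "s3Uri"),
                required(env, "queryJson"),
                env.getOrDefault("BedrockRegion", "us-east-1"),
                Integer.parseInt(required(env, "ArbitarySynchronousBedrockInvocationLength")),
                Integer.parseInt(required(env, "BedrockBatchClaudePromptMaxLength")),
                Integer.parseInt(required(env, "OutputRandomizerPrefixLength")));
    }
}
```

Failing at startup on a missing value is a design choice: a misconfigured function stops before touching the repository instead of failing midway through a batch.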

This function queries the repository for documents that are ready for Amazon Bedrock inference.

To change which documents are selected, modify the queryJson environment variable.
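A minimal sketch of sending that query, assuming HTTP Basic authentication and the public Alfresco Search API endpoint (`/alfresco/api/-default-/public/search/versions/1/search`). The class and method names are hypothetical; the queryJson value is posted unchanged as the request body.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper that builds the search for documents marked for summarization.
final class AlfrescoSearchSketch {
    private AlfrescoSearchSketch() {}

    // Public Search API endpoint of Alfresco Content Services.
    static URI searchUri(String protocol, String host) {
        return URI.create(protocol + "://" + host
                + "/alfresco/api/-default-/public/search/versions/1/search");
    }

    // HTTP Basic credentials built from the user ID and password in the secret.
    static String basicAuth(String userId, String password) {
        String token = userId + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(token.getBytes(StandardCharsets.UTF_8));
    }

    // POSTs the queryJson environment variable as-is.
    static HttpRequest searchRequest(String protocol, String host, String queryJson,
                                     String userId, String password) {
        return HttpRequest.newBuilder(searchUri(protocol, host))
                .header("Content-Type", "application/json")
                .header("Authorization", basicAuth(userId, password))
                .POST(HttpRequest.BodyPublishers.ofString(queryJson))
                .build();
    }
}
```

Sending it with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` returns the search result list; because the query includes `"include":["properties"]`, each entry also carries the node's properties.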

The Alfresco repository credentials are kept in an AWS Secrets Manager secret. The secret holds a single key-value pair, where the key is the Alfresco user ID and the value is its password:

"SecretString": "{\"userId\":\"password\"}"
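One way to fetch and parse that secret, sketched under assumptions: the request goes to the AWS Parameters and Secrets Lambda Extension on `localhost` at PARAMETERS_SECRETS_EXTENSION_HTTP_PORT and is authenticated with the function's `AWS_SESSION_TOKEN` in the `X-Aws-Parameters-Secrets-Token` header. The class name is hypothetical, and the regular-expression parser only handles a single pair without quotes or backslashes; a JSON library is the safer choice in practice.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for reading the Alfresco credentials secret.
final class SecretSketch {
    // Matches exactly one {"userId":"password"} pair; quotes and backslashes are not supported.
    private static final Pattern SINGLE_PAIR =
            Pattern.compile("^\\s*\\{\\s*\"([^\"\\\\]+)\"\\s*:\\s*\"([^\"\\\\]*)\"\\s*\\}\\s*$");

    private SecretSketch() {}

    // GET request to the Parameters and Secrets Lambda Extension running on localhost.
    // The extension expects the function's AWS_SESSION_TOKEN in this header.
    static HttpRequest secretRequest(int port, String secretArn, String sessionToken) {
        URI uri = URI.create("http://localhost:" + port + "/secretsmanager/get?secretId="
                + URLEncoder.encode(secretArn, StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(uri)
                .header("X-Aws-Parameters-Secrets-Token", sessionToken)
                .GET()
                .build();
    }

    // Splits the SecretString value into (userId, password).
    static Map.Entry<String, String> parseCredentials(String secretString) {
        Matcher m = SINGLE_PAIR.matcher(secretString);
        if (!m.matches()) {
            throw new IllegalArgumentException("Expected a single {\"userId\":\"password\"} pair");
        }
        return Map.entry(m.group(1), m.group(2));
    }
}
```

The extension's response is JSON; its `SecretString` field holds the value that would be passed to `parseCredentials`.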

For each document returned by the search, the textual content is extracted. Currently only MIMETYPE_TEXT_PLAIN and MIMETYPE_PDF are supported; for MIMETYPE_PDF content, Apache PDFBox is used to extract the text. The user's prompt to the foundation model is read from the crestBedrock:fm property and appended to the end of the textual content.
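The per-document preparation might look like this hypothetical sketch. It assumes the Claude text-completion prompt format (`Human:` turn followed by `Assistant:`), which the `\n\nHuman:` stop sequence in the sample batch output suggests, and it uses the Alfresco REST content endpoint to fetch the document. The PDFBox extraction step is omitted so the sketch has no dependencies.

```java
// Hypothetical helper for preparing one document's prompt.
final class PromptSketch {
    private PromptSketch() {}

    // The two content types the post lists as supported (text/plain and PDF).
    static boolean isSupported(String mimeType) {
        return "text/plain".equals(mimeType) || "application/pdf".equals(mimeType);
    }

    // Alfresco REST API path that returns a node's content stream.
    static String contentPath(String nodeId) {
        return "/alfresco/api/-default-/public/alfresco/versions/1/nodes/" + nodeId + "/content";
    }

    // Assumed Claude text-completion format: document text first, user instruction last.
    static String buildPrompt(String documentText, String userInstruction) {
        return "\n\nHuman: " + documentText + "\n\n" + userInstruction + "\n\nAssistant:";
    }
}
```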

If the textual content is shorter than ArbitrarySynchronousBedrockInvocationLength, the Amazon Bedrock runtime synchronous API is used to generate the summary. Otherwise, the content is packaged and staged at s3Uri for asynchronous batch processing: content longer than BedrockBatchClaudePromptMaxLength is first truncated to that length, while content longer than ArbitarySynchronousBedrockInvocationLength but shorter than BedrockBatchClaudePromptMaxLength is staged at the same s3Uri without truncation.
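The length checks reduce to a few lines. In this hypothetical sketch, lengths are Java `String` lengths (UTF-16 code units), which is an assumption; the two thresholds would come from ArbitarySynchronousBedrockInvocationLength and BedrockBatchClaudePromptMaxLength.

```java
// Hypothetical helper mirroring the synchronous/batch routing described in the post.
final class RoutingSketch {
    enum Route { SYNCHRONOUS, BATCH }

    private RoutingSketch() {}

    // Content shorter than the synchronous threshold is summarized synchronously.
    static Route route(String text, int syncInvocationLength) {
        return text.length() < syncInvocationLength ? Route.SYNCHRONOUS : Route.BATCH;
    }

    // Keeps only the first maxLength characters of over-long content.
    static String truncate(String text, int maxLength) {
        return text.length() > maxLength ? text.substring(0, maxLength) : text;
    }

    // Text actually sent: unchanged for synchronous calls, possibly truncated for batch.
    static String contentToSend(String text, int syncInvocationLength, int maxLength) {
        return route(text, syncInvocationLength) == Route.SYNCHRONOUS
                ? text
                : truncate(text, maxLength);
    }
}
```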

After the request is processed, for both synchronous and batched invocations, the crestBedrock:generateSummary property is reset to false on the respective document node. For synchronous invocations, the generated summary is written to the crestBedrock:summary property; for batched invocations, the Amazon Bedrock batch job ID is written instead.
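The node update can be sketched as the JSON body of a PUT to the Alfresco REST API's update-node endpoint. The helper is hypothetical, and writing the batch job ID into crestBedrock:summary is an assumption, since the post does not name the property that receives it.

```java
// Hypothetical helper for the node update that follows each invocation.
final class NodeUpdateSketch {
    private NodeUpdateSketch() {}

    // Alfresco REST API update-node path (sent with HTTP PUT).
    static String nodePath(String nodeId) {
        return "/alfresco/api/-default-/public/alfresco/versions/1/nodes/" + nodeId;
    }

    // Clears the flag and stores the summary, or the batch job ID (assumed same property).
    static String updateBody(String summaryOrJobId) {
        return "{\"properties\":{\"crestBedrock:generateSummary\":false,"
                + "\"crestBedrock:summary\":\"" + jsonEscape(summaryOrJobId) + "\"}}";
    }

    // Minimal JSON string escaping: quotes, backslashes, and control characters.
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder(s.length());
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }
}
```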

AmazonBedrockBatchedSummarizationAlfresco

This function uses the Amazon Bedrock batch inference SDK, which is currently in preview; see this page for details on obtaining the SDK.

| Environment variable name | Purpose | Default value (Example) |
|---|---|---|
| PARAMETERS_SECRETS_EXTENSION_HTTP_PORT | HTTP port of the AWS Parameters and Secrets Lambda Extension; see using AWS Secrets Manager secrets in AWS Lambda | None (2773) |
| alfrescoHost | Host name of the Alfresco repository | None (acme.alfrescocloud.com) |
| alfrescoHostProtocol | Connection protocol for the Alfresco repository | https (https or http) |
| awsSecretsManagerSecretArn | ARN of the secret that contains the Alfresco repository credentials used to query, retrieve, and update document nodes | None (arn:aws:secretsmanager:us-east-1:XXXXXXXXXX:secret:YYYY) |
| InputS3Uri | Location of the batch JSONL files to use for inference | None (s3://bbb/ffff/batch/input/) |
| OutputS3Uri | Location of the completed inference results | None (s3://bbb/ffff/batch/output/) |
| BedrockInvokedTagKey | Tag key that marks an input file as already sent for inference | None (BedockInvoked) |
| OutputRandomizerPrefixLength | Length of the Amazon Bedrock batch job ID string created by AmazonBedrockSummarizationAlfresco | None (12) |

Note: Bolded variables are mandatory.

Textual content that is too long for synchronous Amazon Bedrock invocation is batched for asynchronous invocation.

This function lists the JSONL objects (*.jsonl) in the InputS3Uri path and creates an Amazon Bedrock batch inference job for those that are not tagged with the BedrockInvokedTagKey.
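The selection step can be sketched without the AWS SDK by modeling the listing as a map from object key to tags, as S3 `ListObjectsV2` and `GetObjectTagging` would provide them. The names are hypothetical; after creating a job, the function would presumably tag the input object with BedrockInvokedTagKey (for example via `PutObjectTagging`) so that the next run skips it.

```java
import java.util.List;
import java.util.Map;

// Hypothetical helper that picks batch input files not yet sent to Amazon Bedrock.
final class BatchInputSelectionSketch {
    private BatchInputSelectionSketch() {}

    // Keeps *.jsonl keys whose tags lack the invoked-tag key, sorted for stable ordering.
    static List<String> pendingInputs(Map<String, Map<String, String>> tagsByKey,
                                      String invokedTagKey) {
        return tagsByKey.entrySet().stream()
                .filter(e -> e.getKey().endsWith(".jsonl"))
                .filter(e -> !e.getValue().containsKey(invokedTagKey))
                .map(Map.Entry::getKey)
                .sorted()
                .toList();
    }
}
```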

Next, it lists the *.jsonl.out objects in the OutputS3Uri path and extracts the inference results. A sample record from an Amazon Bedrock batch job output looks like this:

```
{
  "modelInput": {<repeat of input provided> },
  "modelOutput": {
    "completion": "<AI generated text>",
    "stop_reason": "stop_sequence",
    "stop": "\n\nHuman:"
  },
  "recordId": "<AmazonBedrockBatchJobId>"
}
```

The string in modelOutput.completion is used to update the Alfresco document node's crestBedrock:summary property. The Alfresco node ID is taken from the name of the *.jsonl.out object: it is the substring that follows the first OutputRandomizerPrefixLength characters and precedes the .jsonl.out extension.

WPkFsvSFlLChf80c05a8-276f-4c98-8162-86d19cec9d9c.jsonl.out

In the example above, the Alfresco nodeId is f80c05a8-276f-4c98-8162-86d19cec9d9c.
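A minimal sketch of that extraction, with a hypothetical class name. It also strips any S3 key path before applying the OutputRandomizerPrefixLength offset, which is an assumption about how the keys are listed.

```java
// Hypothetical helper that recovers the Alfresco node ID from a batch output object key.
final class OutputNameSketch {
    private static final String SUFFIX = ".jsonl.out";

    private OutputNameSketch() {}

    // Drops any path, the random prefix of prefixLength characters, and the suffix.
    static String nodeIdFromOutputKey(String key, int prefixLength) {
        String name = key.substring(key.lastIndexOf('/') + 1);
        if (!name.endsWith(SUFFIX) || name.length() <= prefixLength + SUFFIX.length()) {
            throw new IllegalArgumentException("Unexpected output object name: " + key);
        }
        return name.substring(prefixLength, name.length() - SUFFIX.length());
    }
}
```

With a prefix length of 12, the example object name above yields `f80c05a8-276f-4c98-8162-86d19cec9d9c`.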

Displaying generated document summary

The business user UI checks the value of the crestBedrock:summary attribute. For each document that has a non-empty crestBedrock:summary, the UI provides a visual indication.

General guidelines for prompting Amazon Bedrock

See this page. When prompting Amazon Bedrock, this project places the user's instruction, read from crestBedrock:prompt, after the text extracted from the document.

Conclusion

This post walks through the salient points in the design to enable document summarization on Alfresco Content Services with Amazon Bedrock.

The sample code, software libraries, command line tools, proofs of concept, templates, scripts, or other related technology (including any of the foregoing that are provided) is provided “as is” without warranty, representation, or guarantee of any kind. All that is provided in the post is without obligation of the author or anyone to provide any support, update, enhance, or guarantee its functionality, quality, or performance. You use the technology in this post at your own risk. Neither the author nor anyone else except you is liable or responsible for any issues arising from errors, omissions, inaccuracies or your use of this post. You are solely responsible for reviewing, testing, validating and determining the suitability of this post for your own purposes. By utilizing this post, you release the author and anyone else from any liability related to your use or implementation of it. You should not use this content in your production accounts, or on production or other critical data.

You are responsible for testing, securing, and optimizing this content, such as sample code and/or template, as appropriate for production-grade use based on your specific quality control practices and standards. Deploying this content may incur AWS charges for creating or using AWS chargeable resources, such as running inference on Amazon Bedrock or programs in AWS Lambda.
